Introduction

If you've ever used an LLM like ChatGPT to generate code and ended up with a broken snippet, an outdated method, or a made-up function, you're not alone. Large Language Models (LLMs) are powerful, but they're only as good as the information they're trained on or fed.

They rely heavily on web-based content, but most sites are built for humans, not machines. The result? LLMs often struggle to extract accurate, structured information from messy HTML. That's exactly why LLMs.txt was introduced.

In this article, you'll learn what LLMs.txt is, why it is important, and how LLMs actually use it. You'll also see how to generate and upload one to your site. Let's dive in!

What is LLMs.txt?

LLMs.txt is a text file, especially created for LLMs to understand the information presented on the web pages. It functions as a curated index for large language models like ChatGPT, Claude, and Gemini, providing them with the website's critical contextual details and links to machine-optimized content.

It enhances the LLMs' interaction with the website, allowing them to analyze structured data without going through unnecessary data like JavaScript syntax and HTML fragments. Additionally, LLMs.txt is categorized into two file paths: LLMs.txt and LLMs-full.txt. Let us understand how they are different.

LLMs.txt vs LLMs-full.txt

While LLMs.txt provides a quick, simplified structure to the LLMs, LLMs-full.txt provides comprehensive details of the website content in one place. Here's an example:

Suppose you have given a prompt to ChatGPT:

"How do I set up authentication for my SaaS Product?"

If the website has llms.txt, it will act like an index showing ChatGPT the path of the key docs, such as /getting-started, /auth-guide, and /api-reference. This will help the model quickly locate the right pages and give a more accurate, context-aware answer.

Now, if you want ChatGPT to go deeper and understand not just where the docs are but what's inside them, you can provide LLMs-full.txt. It contains all your docs combined into one clean file, so when you paste that link into ChatGPT, it gets the full picture: endpoints, workflows, parameters, and edge cases. One link, full context.

Together, these two files make ChatGPT smarter with minimal effort on your end.

Why is LLMs.txt Important?

Traditional websites are primarily designed for humans to read. As a result, the large language models find it difficult to read them, as most websites contain CSS, JavaScript, HTML, and navigation elements. These elements are complex in nature for LLMs to extract relevant information.

When these large language models process websites, they face a couple of challenges, including context window limitations, inefficient crawling, and HTML complexity. The context window is basically the text limit of the large language model that it can consider at a time. Since the websites contain unnecessary elements, the LLMs have to crawl and filter out the web pages. However, this filtration might not exclude all the irrelevant information, resulting in an inaccuracy and exceeding the context window limit.

If the website consists of an LLMs.txt file, it will enhance the accuracy and readability of the large language models. The file guides the large language models with the right path to relevant information, allowing them to extract accurate information without any misinterpretation.

Now, here comes the question - search engines crawl websites through robots.txt, and LLMs also crawl web pages to extract information. Why can't they utilize robots.txt for the same purpose?

How Do LLMs Utilize LLMs.txt?

When the developer gives a prompt to an LLM, its orchestration frameworks parse the existing website's LLMs.txt to find the relevant sources. This process occurs in three stages:

Stage 1: The model examines the LLMs.txt file to determine whether the website offers the required information and extracts the specific URLs where the information lies. This helps the LLM fetch data from the right path without crawling unnecessary HTML.

Stage 2: Once the large language model has identified the URLs, it visits those web pages using the linked markdown files. For example, it will utilize authentication.md instead of authentication.html.

This means that if the LLM reads authentication.html, it will read the entire webpage, including navigation bars, pop-ups, side menus, styling code, scripts, headers, and footers. On the other hand, the markdown file will filter out noise and help the LLMs extract valuable information.

Stage 3: After extracting the required information, the large language model checks if it can add that much information to its context window. If the information exceeds the window limit, and the LLMs.txt file consists of some content highlighted as "Optional," the LLM discards it.

This is how the LLMs.txt file makes it easy for the AI model to extract the correct information that the developer has asked it to provide. Instead of going through the HTML files and filtering out unnecessary information, LLMs can fetch the required data from LLMs.txt.

How to Generate LLMs.txt?

Generating the LLMs.txt file is pretty simple. You can utilize many generators to create an LLMs.txt file for your website – Firecrawl, SiteSpeakAI, and WordLift.

While the process is similar on each site, here's a step-by-step process for generating the LLMs.txt file:

- Visit Firecrawl.dev and create an account.

- You will see an API key on the right. Copy it as it will be required during the generation process.

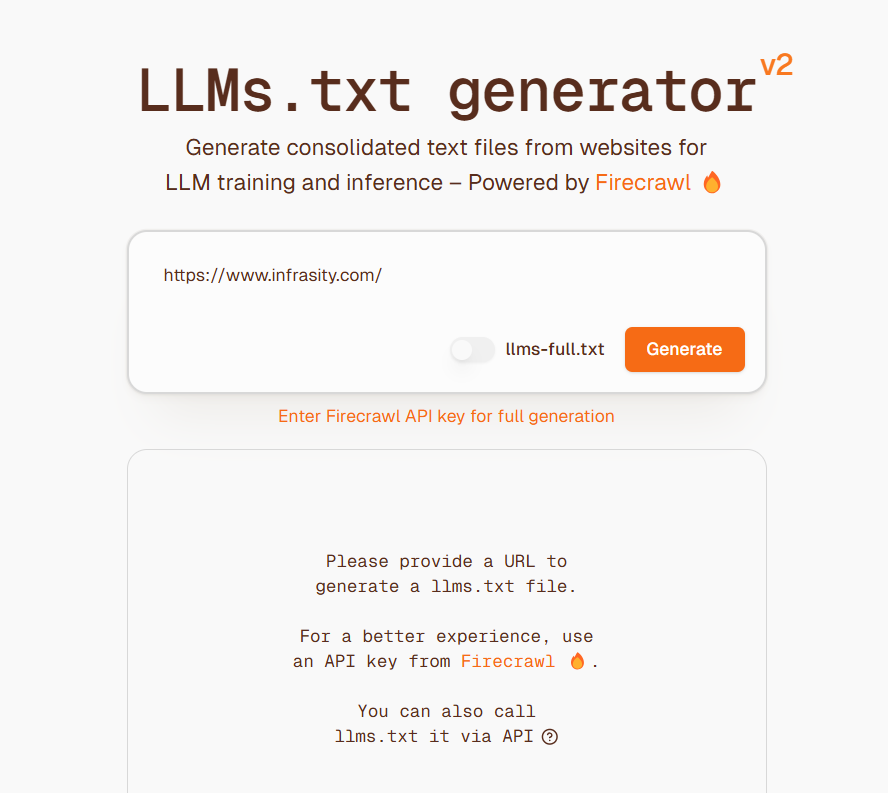

- Visit the LLMs.txt generator and enter the website's URL.

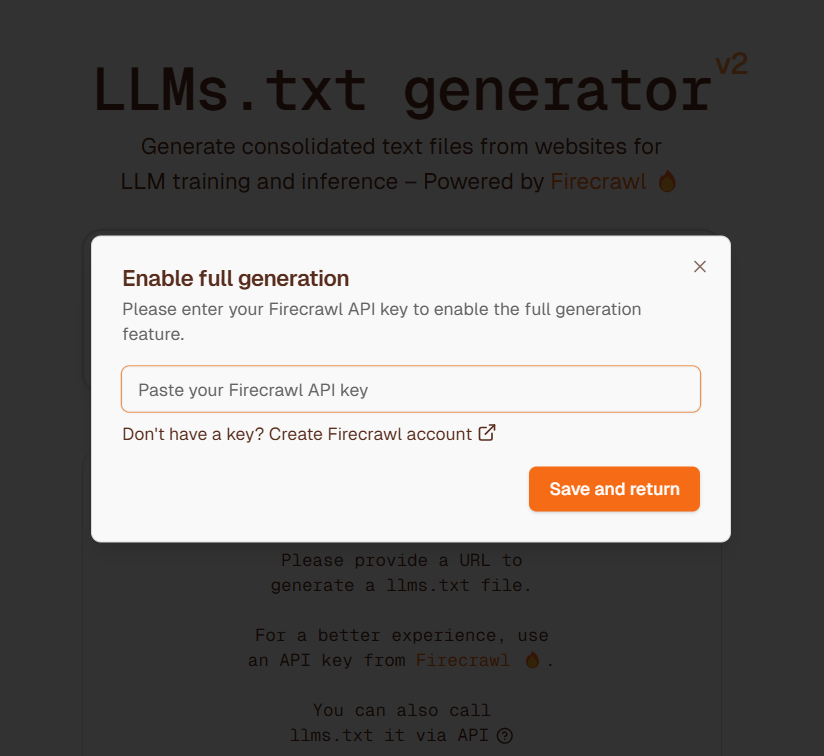

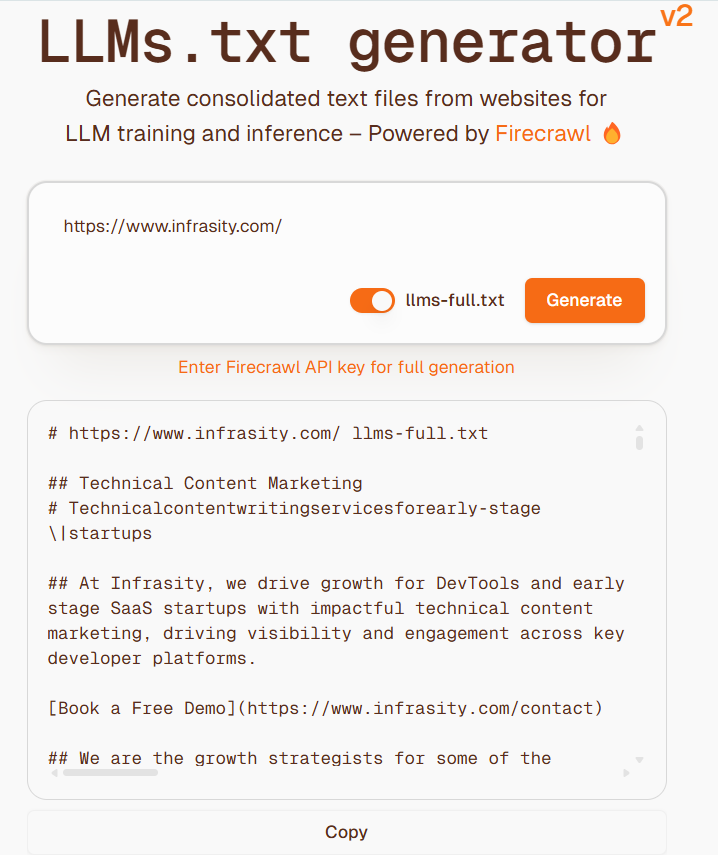

- Now, enter the Firecrawl API key that you copied and click on "Generate." It will take a few minutes to create an LLMs.txt file for your website. Additionally, you can opt to generate an LLMs-full.txt file based on your preferences.

- Copy the generated LLMs.txt file to upload it to GitHub.

How to Upload the LLMs.txt file?

Now that you have generated the LLMs.txt or LLMs-full.txt file, let's understand how you can upload it to GitHub:

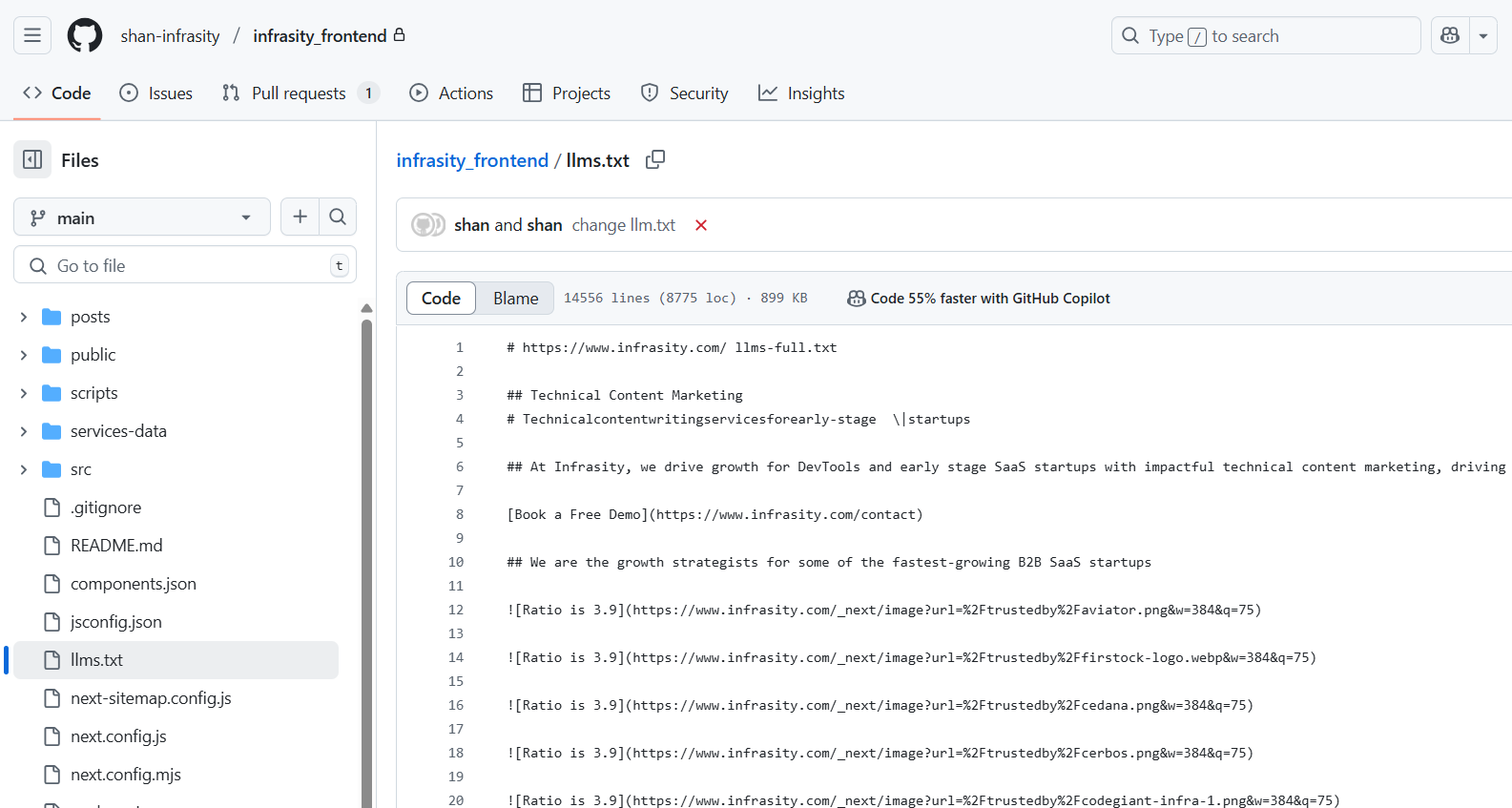

- Navigate to your website's GitHub repository.

- Locate the root directory and create the

llms.txtfile in the root of the repository. - Paste the LLMs.txt or LLMs-full.txt content in your new file.

- Commit changes to your repository and check if it's live after triggering it.

Conclusion

Large language models are utilized for various purposes, where they extract information from multiple relevant sources. However, those websites are mainly designed for human readers using HTML and other elements. The LLMs find it difficult to process complex HTML and crawl websites. As a result, they deliver inaccurate responses.

Adding an LLMs.txt file to your website can simplify the process and help large language models read the content better. This file has two variants – LLMs.txt and LLMs-full.txt – that help LLM models navigate easily and extract relevant information from web pages. To generate this file, you can utilize LLMs.txt generators like Firecrawl and upload it into your GitHub repository.

FAQs

1. What is the Difference Between LLMs.txt and Robots.txt?

While the LLMs.txt file is designed for large language models to read and extract relevant data from websites, the Robots.txt file is created for search engine crawlers to tell them which URLs of the website they can crawl or not.

2. What is the Context Window of ChatGPT?

The context window of ChatGPT is 128,000 tokens, which is equivalent to 96,000 words of memory.

3. Why Should I Use LLMs.txt on My Website?

If you want more control over how AI systems interact with your site, whether to include, exclude, or prioritise certain content, llms.txt offers a simple, transparent way to signal your preferences.

4. What Kind of Files Does LLMs.txt Point to?

It typically links to clean, structured Markdown (.md) files hosted at predictable URLs. These files contain focused content and exclude navigation menus, ads, or other distractions, making them ideal for LLMs to parse.

5. Can I Block All LLMs From Using My Content?

Yes, you can block all LLMs from using your website content by adding an llms.txt file to the root of your repository with: User-agent: * Disallow: /